Architecting a Testable Web Service in Spark Framework

TLDR; Architect a Web Service using Spark Framework so that more of it can be Unit tested, and so that HTTP @Test methods can run in the build without deploying the application. Create the API as a POJO. Start Spark in @BeforeClass, stop it in @AfterClass, make simple HTTP calls.

Background to the Spark and REST Web App Testing

I’m writing a REST Web App to help me manage my online training courses.

I’m building it iteratively using TDD, and trying to architect it to be easily testable at multiple layers of the architectural stack.

Previously, with RestMud I had to pull it apart to make it more testable after the fact, and I’m trying to avoid that now.

I’m using the Spark Java Framework to build the app because it is simple and lightweight, and I can package the whole application into a standalone jar that runs anywhere a JVM exists, with minimal installation requirements for the user. This means I can also use it for training.

TDD is pretty simple when you only have domain objects as they are isolated and easy to build and test.

With a Web app we face other complexities:

- needs to be running to accept http requests

- often needs to be deployed to a web/app server

Spark has an embedded Jetty instance so can start up as its own HTTP/App server, which is quite jolly. But that generally implies that I have to deploy it and run it, prior to testing the REST API.

The examples on the Spark web site use a modern Java style with lambdas, which makes it a little more difficult to Unit test the code in the lambdas.

Making it a little more testable

To make it a little more testable, in the lambda I can delegate off to a POJO:

get("/courses", (request, response) -> {

return coursesApi.getCourses(request,response);

});

This was the approach I took in RestMud and it means, in theory, that I have a much smaller layer (routing) which I haven’t unit tested.

But the request and response objects are from the Spark framework, and they are instantiated with an HttpServletRequest and HttpServletResponse. If I pass the Spark objects through to my API, I make my API Unit testing much harder: I would probably have to mock the HttpServletRequest and HttpServletResponse just to instantiate a Spark Request and Response, and I would tightly couple my API processing to the Spark framework.

I prefer, where possible, to avoid mocking, and I really want simpler objects to represent the Request and Response.

Simpler Interfaces

I’m creating an interface that my API requires - this will probably end up having many similar methods to the Spark Request and Response but won’t have the complexity of dealing with the Servlet classes and won’t require as robust error handling (since that’s being done by Spark).

get("/courses", (request, response) -> {

return coursesApi.getCourses(

new SparkApiRequest(request),

new SparkApiResponse(response));

});

I’ve introduced a SparkApiRequest which implements my simpler ApiRequest interface and knows how to bridge the gap between Spark and my API.
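One way such a bridge could look is sketched below. It is only an illustration under stated assumptions: Spark is not on the classpath here, so FrameworkRequest is a hypothetical stand-in exposing the body() and headers(String) accessors that Spark's Request offers, and the getBody(), setBody() and getHeader() methods on ApiRequest are an assumed shape rather than the interface from this project.

```java
// Assumed shape of the simpler request interface that the API codes against.
interface ApiRequest {
    String getBody();
    void setBody(String body);
    String getHeader(String name);
}

// Hypothetical stand-in for spark.Request, so the sketch compiles without Spark.
interface FrameworkRequest {
    String body();
    String headers(String name);
}

// Adapter: pulls what the API needs out of the framework object,
// so the API itself never sees a framework type.
class SparkApiRequest implements ApiRequest {
    private final FrameworkRequest request;
    private String body;

    SparkApiRequest(FrameworkRequest request) {
        this.request = request;
        String raw = request.body();
        // normalise a missing body to an empty string for the API
        this.body = (raw == null) ? "" : raw;
    }

    @Override
    public String getBody() {
        return body;
    }

    @Override
    public void setBody(String body) {
        this.body = body;
    }

    @Override
    public String getHeader(String name) {
        return request.headers(name);
    }
}
```

With the real framework, the constructor would take Spark's Request instead of the stand-in; the API code that depends only on ApiRequest would not change.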

I’m coding my API to use ApiRequest and have therefore created a TestApiRequest object, which implements ApiRequest, to use in my API Unit @Test methods. The example below is ugly at the moment: it is a first draft @Test method, and I haven’t yet refactored it to create the various methods that will help make my test code more literate and readable.

@Test

public void canCreateCoursesViaApiWithACourseList(){

Gson gson = new Gson();

CoursesApi api = new CoursesApi();

CourseList courses = new CourseList();

Course course = new CourseBuilder("title", "author").build();

courses.addCourse(course);

ApiRequest apiRequest = new TestApiRequest();

ApiResponse apiResponse = new TestApiResponse();

String sentRequest = gson.toJson(courses);

apiRequest.setBody(sentRequest);

System.out.println(sentRequest);

Assert.assertEquals("", api.setCourses(apiRequest, apiResponse));

Assert.assertEquals(201,apiResponse.getStatus());

Assert.assertEquals(1, api.courses.courseCount());

}

In the above I create the domain objects, use Gson to serialise them into a payload, create the TestApi request and response, and pass those into my API.
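The TestApiRequest and TestApiResponse classes used in that test are not shown. A minimal sketch of what such in-memory implementations might look like follows. Only setBody() and getStatus() appear in the test above; getBody(), setStatus(), the default status of 200 and the ExampleApi class are assumptions added purely for illustration.

```java
// Assumed shapes of the interfaces the API is coded against.
interface ApiRequest {
    String getBody();
    void setBody(String body);
}

interface ApiResponse {
    int getStatus();
    void setStatus(int status);
}

// In-memory request: just holds whatever the test puts into it.
class TestApiRequest implements ApiRequest {
    private String body = "";

    @Override
    public String getBody() {
        return body;
    }

    @Override
    public void setBody(String body) {
        this.body = body;
    }
}

// In-memory response: records the status so the test can assert on it.
class TestApiResponse implements ApiResponse {
    private int status = 200; // assumed default

    @Override
    public int getStatus() {
        return status;
    }

    @Override
    public void setStatus(int status) {
        this.status = status;
    }
}

// Hypothetical API method, only here to show the test doubles in use.
class ExampleApi {
    String create(ApiRequest request, ApiResponse response) {
        if (request.getBody().isEmpty()) {
            response.setStatus(400);
            return "empty body";
        }
        response.setStatus(201);
        return "";
    }
}
```

Because these objects are plain Java with no servlet classes behind them, no mocking library is needed to construct them in a test.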

This has the advantage that the API is instantiated as required for testing - Spark is static so is a little harder to control for Unit testing.

I also have direct access to the running application objects, so I can check the application state in the Unit test. I can’t do that with an HTTP test, where I would have to make a second request to get the list of courses.

This allows me to build up a set of @Test methods that can drive the API, without requiring a server instantiation.

But this leaves the routing and HTTP request handling as a gap in my testing.

Routing and HTTP request handling testing

With RestMud I take a similar approach but I’m working a level down where the API calls the Game, and I test at the Game. Here I haven’t introduced a Course Management App level, I’m working at an API level. I might refactor this out later.

With RestMud I test at the API with a separate set of test data, which is generated by walkthrough unit tests at the game level (read about that here).

I wanted to take a simpler approach with this App, and since Spark has a built-in Jetty server it is possible for me to add HTTP tests into the build.

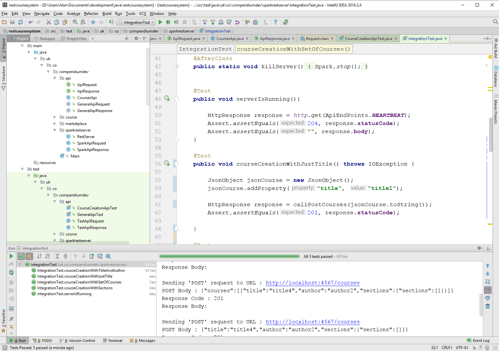

For those of you decrying “That’s not a Unit Test”, that’s fine: I have a class called IntegrationTest, which at some point will become a package filled with these things.

To avoid deploying, I create @BeforeClass and @AfterClass methods which start and stop the Spark Jetty server:

@BeforeClass

public static void createServer(){

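RestServer server = new RestServer();

// wait until the embedded Jetty server is ready before reading the port

Spark.awaitInitialization();

host = "localhost:" + Spark.port();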

http = new HttpMessageSender("http://" + host);

}

@AfterClass

public static void killServer(){

Spark.stop();

}

I pushed all my server code into a RestServer object rather than have it all reside in main, but could just as easily have used:

String [] args = {};

Main.main(args);

// RestServer server = new RestServer();

This works because Spark is statically created and managed: as soon as I define a route, Spark starts up, creates a server and runs my API.

Then it is a simple matter to write @Test methods that use HTTP:

@Test

public void serverIsRunning(){

HttpResponse response = http.get(ApiEndPoints.HEARTBEAT);

Assert.assertEquals(204, response.statusCode);

Assert.assertEquals("", response.body);

}

I have an HttpMessageSender abstraction which also uses an HttpRequestSender.

- HttpMessageSender is a more ‘logical’ level that builds up a set of headers and has information about base URLs etc.

- HttpRequestSender is a physical level

In my book Automating and Testing a REST API I have a similar HTTP abstraction and it uses REST Assured as the HTTP implementation library.

For my JUnit Run Integration @Test, I decided to drop down to a simpler library and avoid dependencies, so I’m experimenting with the java.net HttpURLConnection.
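A rough sketch of how the two levels could be split using only java.net.HttpURLConnection is shown below. The class names and the statusCode and body fields come from the snippets above; the send() signature, setHeader(), and wrapping IOException in UncheckedIOException are assumptions made for this illustration, not the actual implementation.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Plain holder exposing the two fields the @Test method reads.
class HttpResponse {
    final int statusCode;
    final String body;

    HttpResponse(int statusCode, String body) {
        this.statusCode = statusCode;
        this.body = body;
    }
}

// "Physical" level: sends one request over HttpURLConnection.
class HttpRequestSender {
    HttpResponse send(String method, String url, Map<String, String> headers) {
        try {
            HttpURLConnection con =
                    (HttpURLConnection) URI.create(url).toURL().openConnection();
            con.setRequestMethod(method);
            for (Map.Entry<String, String> header : headers.entrySet()) {
                con.setRequestProperty(header.getKey(), header.getValue());
            }
            int status = con.getResponseCode();
            // 4xx and 5xx responses expose their body on the error stream
            InputStream in = status >= 400 ? con.getErrorStream() : con.getInputStream();
            String body = (in == null) ? "" : readAll(in);
            con.disconnect();
            return new HttpResponse(status, body);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    private static String readAll(InputStream in) throws IOException {
        try (InputStream stream = in) {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buffer = new byte[4096];
            int read;
            while ((read = stream.read(buffer)) != -1) {
                out.write(buffer, 0, read);
            }
            return new String(out.toByteArray(), StandardCharsets.UTF_8);
        }
    }
}

// "Logical" level: knows the base URL and the headers to send each time.
class HttpMessageSender {
    private final String baseUrl;
    private final Map<String, String> headers = new LinkedHashMap<>();
    private final HttpRequestSender sender = new HttpRequestSender();

    HttpMessageSender(String baseUrl) {
        this.baseUrl = baseUrl;
    }

    void setHeader(String name, String value) {
        headers.put(name, value);
    }

    HttpResponse get(String path) {
        return sender.send("GET", baseUrl + path, headers);
    }
}
```

Keeping the connection handling in one small class means the HTTP library could later be swapped without touching the @Test methods, which only talk to HttpMessageSender.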

How is this working out?

Early days, but thus far it allows me to TDD the API functionality with @Test methods which create payloads and set headers which I can pass in to the API level.

I can also TDD the HTTP calls, and this helps me mitigate HTTP routing errors and errors related to my transformation of Spark Request and Responses to API Request and Responses.

This is also a lot faster than having a build process that runs the Unit tests and then has to package and deploy, start up the app, run integration tests, and close down the app.

This also means that (much though I love using Postman), I’m not having to manually interact with the API as I build it. I can make the actual HTTP calls as I develop.

This does not mean that I will not manually interact with the application to test it, or that I will not automate a separate set of HTTP API execution. I will… but not yet.

At some point I’ll also release the source for all of this to GitHub.